Space Data Centers, Humanoids & Tesla Robotaxis - are we losing our minds?

With all the hype - are we seconds away from a new industrial revolution, or are we just burning money on hope?

Now, before you start throwing rotten tomatoes at us, we’d like you to listen to a couple of points we will be going through. If you disagree, let us know. The stock market and the tech scene have been in a frenzy recently. We are witnessing not a Dotcom bubble, but something potentially bigger. The Dotcom bubble was characterized by unproven, unprofitable companies popping up around an existing, revolutionary technology (the Internet). This time we are stepping into fields that are not proven and did not even exist a decade ago. What we are witnessing now is the 2022 AI hype on steroids.

Actually, no, let us rephrase that.

What we are witnessing right now is the AI hype on pure white Colombian top-shelf cocaine.

To clarify, the hardware demand is tangible. The actual bubble lies in the speculative expectations of what AI applications can currently achieve.

The narrative has shifted from “AI is a useful tool” to “AI is THE engine that will solve physics, labor, and capital overnight.” At the center of this storm stands Elon Musk, a figure who has transcended the role of a CEO to become the leader of a specific techno-futurist cult. He is not only the most vocal proponent of this new industrial revolution but also stands at the forefront of the technology, whether through the orbital ambitions of SpaceX or the terrestrial automation of Tesla.

In this report, we are stripping away the marketing veneer to look at the cold, hard engineering realities. We will be going through all three major pillars mentioned in the title: Space Data Centers, Humanoids, and Robotaxis. We are specifically focusing on Elon Musk because his companies act as the bellwether for the entire sector’s rationality—or lack thereof.

Short disclaimer: We are not professional investors or the world’s best engineers. These are points made from simple observation, rigorous application of the laws of physics, and a lot of deep research.

So let us go through the table of contents:

1. Space Data Centers, what is the vision?

1.1 Orbital Computing Units

1.2 Thermal Management

1.3 Power Generation & Constraints

2. Humanoids, for what?

2.1 Outlook and Market: The General Purpose Fallacy

2.2 The Promises We Have Been Giving: The Wizard of Oz

3. Safety first - Robotaxis

3.1 If you buy cheap, you buy two times

Now that we have the table of contents out of the way, let us get into the nitty-gritty.

1. Space Data Centers, what is the Vision?

The AI bubble is so big, it reached Space. Only the vast universe can fit the AI Race now; we are re-living a Cold War era type of Science Race but with China this time.

Since Data Centers on Earth need a lot of infrastructure and vast resources, why not put them into space? We have enough space, no energy problem since the Sun is literally right there shining at us, and we could not only revolutionize Data Centers but maybe in the future save some money. Most importantly, it gets us out of Memphis, avoiding the complaints from residents who happen to live near the noisy xAI Datacenter.

Well, that is a great idea, but what is actually the vision here? We have to say this applies not only to Musk but also to Bezos who, with Blue Origin, is tailgating Musk with every step he takes (basically the Temu version of the visionary kind).

Anyways, let us get back to the technicals. Musk said on X: “Simply scaling up Starlink V3 satellites, which have high speed laser links would work”. Well there you go, it is that easy. The idea behind this is that V3 satellites, which boast 1 Terabit per second (Tbps) downlink speeds, can provide an opportunity to offload the workload of xAI or other AI agents.

However, we must differentiate between aggregate capacity and link capacity. While a V3 satellite might handle 1 Tbps of aggregate traffic across thousands of users, the backhaul links (laser and RF) are limited. A modern H100 GPU cluster requires petabits of inter-node bandwidth for training. A 1-4 Tbps pipe is effectively a straw for a hyperscale cluster, turning your orbital supercomputer into a high-latency brick.
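To put the "straw" into numbers, here is a minimal back-of-the-envelope sketch. The 1 Tbps link is the figure quoted above; the 400 Gbit/s network port per GPU and the 10,000-GPU cluster size are our own illustrative assumptions, not official specs of any particular deployment.

```python
# Rough comparison of one satellite link with the network fabric of a
# GPU training cluster. Everything except LINK_TBPS is an assumption.

LINK_TBPS = 1.0            # Starlink V3 figure quoted in the text (Tbit/s)
NIC_GBPS_PER_GPU = 400.0   # assumption: one 400 Gbit/s network port per GPU
CLUSTER_GPUS = 10_000      # assumption: size of a large training cluster


def gpus_fed_by_link(link_tbps: float, nic_gbps: float) -> float:
    """How many GPU network ports a single link could keep saturated."""
    return link_tbps * 1000.0 / nic_gbps


def cluster_fabric_tbps(gpus: int, nic_gbps: float) -> float:
    """Aggregate injection bandwidth of the cluster fabric in Tbit/s."""
    return gpus * nic_gbps / 1000.0


if __name__ == "__main__":
    fed = gpus_fed_by_link(LINK_TBPS, NIC_GBPS_PER_GPU)
    fabric = cluster_fabric_tbps(CLUSTER_GPUS, NIC_GBPS_PER_GPU)
    print(f"One {LINK_TBPS} Tbps link saturates about {fed:.1f} GPU ports")
    print(f"Cluster fabric: {fabric:.0f} Tbps ({fabric / 1000:.1f} Pbps)")
```

Under these assumptions, the whole satellite link is worth the network ports of two or three GPUs, while the cluster fabric sits in the petabit range.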

If we look at it from an economic standpoint, it could definitely work out. Since the numbers are not public regarding what a V3 Starlink costs, we can only estimate. It is probably in the low single-digit millions. Let us say for the sake of the argument it is priced at $1.5 Million per unit, which is reasonable and well under industry standards given SpaceX’s vertical integration.

To sum the vision up: in about 5 to 15 years, we will essentially be beaming data back and forth for a fraction of the cost we have right now. With SpaceX having the capacity to do this due to cheap launches (Starship targets <$200/kg) and mass production of satellites, it might not even be so unreasonable for him or Bezos to do that, right?

Well, hold your horses. Let’s look at the physics & economics.

1.1 Orbital Computing Units: The Radiation Trap

Yeah yeah yeah, we get it. With rapid progress we will eventually be able to build GPUs which withstand space, and after all, there is a Space Station floating around and a lot of other computers just floating up there. But we have to make a clear distinction here.

The ISS is similar to the computer of a plane with its Redundancy and Voting System. Critical computers are replicated (e.g., triple‑modular redundancy) so that if one gives a wrong answer, the others outvote it and the system keeps working. This system protects the ISS actively beyond material protection. But for AI, you need raw throughput, not just safety.
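For readers who have never seen a voting system, here is a minimal sketch of the idea in Python. It is our own toy illustration of triple-modular redundancy, not the actual ISS flight software; the function name `majority_vote` is hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_vote(results: Sequence[Hashable]) -> Hashable:
    """Return the value reported by a strict majority of replicas.

    Raises ValueError when no value has a strict majority, because in
    that case the voter cannot tell which replica is right.
    """
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        raise ValueError("no majority: replicas disagree")
    return value


if __name__ == "__main__":
    correct = 42
    upset = correct ^ (1 << 3)   # simulate a single bit flip in one replica
    print(majority_vote([correct, upset, correct]))
```

Note the price of that safety: three computers do the work of one. That is fine for a flight computer, and painful when what you want is raw throughput.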

The most critical part, which we have not even talked about yet, is pretty simple: in orbit, hardware is exposed to high‑energy particles from the Sun and cosmic rays. The resulting effects are called “single‑event upsets” (SEUs), “single‑event latch-ups,” and cumulative dose damage. The key here is cumulative dose damage. While you can spam space with V3 satellites which are cheaply made and launched, the compute hardware, which is already extremely expensive on Earth, only gets exorbitant (pun intended) in space.

Let us just get a rough estimate because, again, there are no official numbers online, which is why we have to resort to estimates:

Compute: The best current space‑usable NVIDIA platforms (Jetson class) offer roughly 1–5% of H100/B200‑class performance per chip. Chips for space usually need to be “Rad-Hard” (radiation hardened), but Rad-Hard chips are typically huge, slow, and 3-5 generations behind current tech (think 28nm or 65nm process). You cannot just buy an H100 off the shelf and expect it to survive a solar storm without heavy shielding (lead/tantalum), which kills your launch cost advantage. In addition, there is currently no radiation-hardened 4nm process in existence (hence the example above). You would essentially need Triple Modular Redundancy (running three chips to vote on one answer), which triples your mass and power requirements, destroying the economic viability.

Unit Price: Raw silicon is much cheaper than an H100/B200, but after full space qualification and integration, the total cost per usable space compute node can end up in the same order of magnitude as a single high‑end data‑center GPU, despite delivering only a few percent of the performance.

Obsolescence: Oh Moore’s law, how many dreams have you destroyed by now? The law states that the number of transistors on a chip doubles roughly every two years, which means by the time you have a fully built orbital data center, its hardware is already outdated.

You could argue “well let us just coat H100 Chips for space” (meaning with RAD shielding) but the physics here are a problem too. We already mentioned the latch-up problem, but just to showcase how severe that actually is: Modern AI chips use tiny transistors (4nm process nodes) to get their speed. The smaller the transistor, the more vulnerable it is to radiation. A stray cosmic ray can flip a bit (turning a 0 into a 1), causing calculations to fail, or worse, cause a “latch-up” that burns out the chip entirely.

The chip itself costs around $40,000. Prepare and launch it to space and, for the sake of our argument, assume it is like $80,000. That is $80,000 just going kabuuf on a single unit, for a usage time that is guaranteed to be no longer than it would be on Earth.

You do not have the same problem on the Earth we live on. The real problem is that AI is used for slop in any kind of way for free by the masses—and yes we do especially mean those unfunny videos on X or right-wing propaganda—and that AI itself is not computing efficiently, which is why that much computing power is needed. Yes, this is still a new technology, but resorting to space instead of fixing the problem on Earth is typical Musk fashion.

To sum it up - GPUs in space are a feat of engineering which we have not achieved yet. And while it sounds almost too good to be true, the consequences of having giant solar-powered data centers in orbit are so abstract that we do not even know what effects they could have on Earth or in space; they might even become a problem for future research incentives.

1.2 Thermal Management: The Thermodynamic Brick Wall

In movies, you might see things or people in space freezing to death; they literally grow icicles out of their noses. So if we put our data centers in space, we do not need to worry about the climate on Earth anymore while we smartly use the icy cold of space - sounds like a win-win situation until it isn’t.

That idea basically demonstrates a fundamental misunderstanding of thermodynamics.

Let us point out the obvious: since it is space, you cannot use fans because there is no air. You also do not have water in space which is just on standby for cooling your Data Center.

Okay, pretty simple so far, but how does the ISS do it then? Before we answer that, we have to know what even works in space, and there is basically only one answer: radiators. The only way to get rid of heat is thermal radiation, meaning emitting infrared light into the void. This is governed by the Stefan-Boltzmann law.

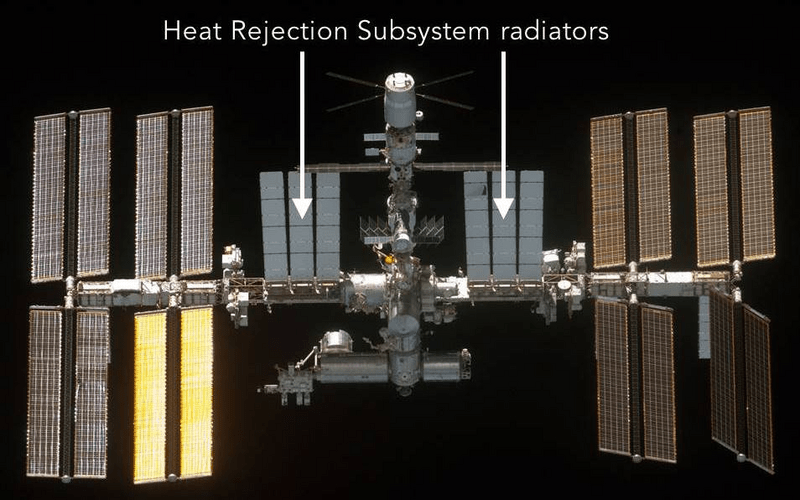

Alright then, how big can those radiators be? Well, massive. The ISS uses massive ammonia-filled radiators just to cool a tiny fraction of the power a data center uses.

Below you see a picture of the radiators on the ISS, which are there just to cool ~70 kW.

(Image by NASA)

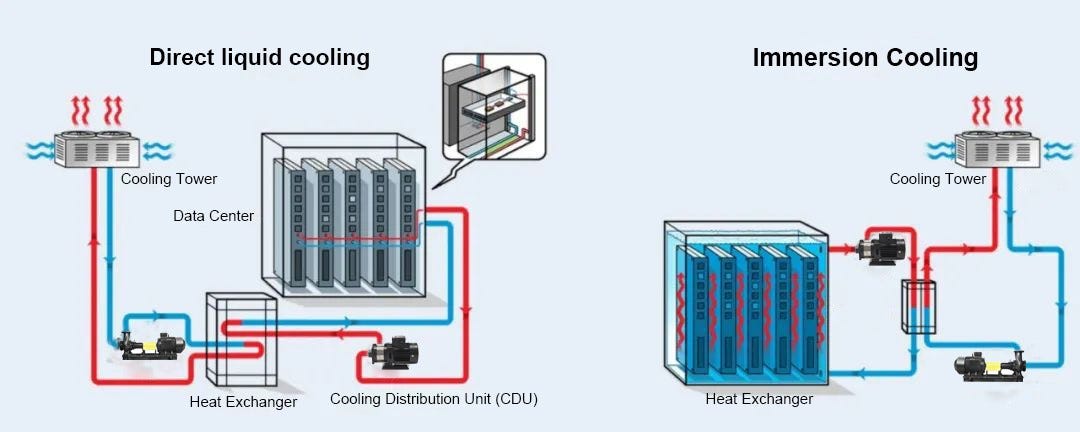

So in order to reject the megawatt-level heat generated by AI clusters, you need massive radiators. A study on a theoretical 1 Gigawatt orbital data center calculated it would need 3.3 square kilometers of radiator surface area. For context, that is essentially deploying a structure the size of a small village just to act as a heat sink. So maybe it is not as easy as sending some V3 satellites into space, as Elon said. You are not only launching servers, you are launching giant, fragile umbrellas made of exotic materials just to keep the chips from melting. Below you see how simple cooling is on Earth in comparison.

(Image from Misiler)
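If you want to sanity-check the radiator numbers yourself, here is a small sketch based on the Stefan-Boltzmann law, P = ε·σ·A·T⁴. The emissivity of 0.9, the 300 K radiator temperature and the one-sided, sunlight-free panel are our own simplifying assumptions; a real design has to deal with incoming sunlight, Earth's infrared glow and much more, so treat the result as an order-of-magnitude estimate and not as a reproduction of the study quoted above.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant in W / (m^2 K^4)


def radiator_area_m2(heat_w: float,
                     temp_k: float = 300.0,     # assumption: radiator temperature
                     emissivity: float = 0.9,   # assumption: good radiator coating
                     sides: int = 1) -> float:  # assumption: one radiating face
    """Ideal radiator area needed to reject heat_w watts into deep space.

    Ignores absorbed sunlight and Earth infrared, so real radiators
    need more area than this.
    """
    flux_w_per_m2 = emissivity * SIGMA * temp_k ** 4
    return heat_w / (flux_w_per_m2 * sides)


if __name__ == "__main__":
    for label, watts in [("ISS-class load (70 kW)", 70e3),
                         ("Orbital data center (1 GW)", 1e9)]:
        area = radiator_area_m2(watts)
        print(f"{label}: {area:,.0f} m^2 ({area / 1e6:.3f} km^2)")
```

Even this idealized version lands in the square-kilometer range for 1 GW, which is the same ballpark as the 3.3 square kilometers mentioned above.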

But wait, this is still not all there is to it, but we are trying to keep it short so we can keep your attention.

1.3 Power Generation & Constraints: The Solar Cost Fallacy

You know at this point we get tired of doubting and scrutinizing this idea of data centers in space, making it sound like a future of NieR: Automata is awaiting us with hot robo-androids and stuff, but no, this is genuinely frustrating to debunk because what is the basis for this stuff? Energy is already a problem on Earth so why should it be better in space? Alien technology? Okay, this was a little exaggerated, we don't hate on visionaries and we don't hate on trying or believing, otherwise we'd still put some eau de toilette on after we finish our business… anywayssss since we are not a science magazine we can only write what is bugging us with this idea.

Energy in space: before you say "But solar power is free!", yes, solar irradiance is high (~1366 W/m²). But capturing it and delivering it to a hungry GPU is a financial black hole. In space you not only pay a premium for the launch, you also pay for high-quality space-grade hardware, which costs far more than its counterpart on Earth.

On Earth, silicon solar panels are cheap (~$0.03/watt). In space, you need high-efficiency Gallium Arsenide (GaAs) or multi-junction cells to survive the radiation. These cost roughly $350/watt. To build a 5 Gigawatt array in space (sufficient for a major AI cluster), you would need panels covering an area roughly the size of lower Manhattan (4km x 4km). At $350/watt, the panels alone would cost about $1.75 trillion, completely destroying the economic argument compared to plugging into the grid on Earth. Even if we assume no H100 chips (so less electricity), not much need for cooling and so on, we’d still be looking at 1 GW, which still means hundreds of billions for panels. The cost here will most definitely outweigh whatever net positive it’d bring.
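The arithmetic behind those panel numbers is simple enough to check in a few lines. The prices per watt and the irradiance are the figures quoted above, taken at face value; the 30% cell efficiency is our own assumption for multi-junction cells.

```python
SPACE_USD_PER_W = 350.0    # space-grade cells, figure quoted in the text
EARTH_USD_PER_W = 0.03     # terrestrial silicon, figure quoted in the text
IRRADIANCE_W_M2 = 1366.0   # solar irradiance in orbit, from the text
CELL_EFFICIENCY = 0.30     # assumption: multi-junction cell efficiency


def array_cost_usd(power_w: float, usd_per_w: float) -> float:
    """Panel cost for a given electrical output."""
    return power_w * usd_per_w


def array_output_w(side_km: float) -> float:
    """Electrical output of a square array facing the Sun."""
    area_m2 = (side_km * 1000.0) ** 2
    return area_m2 * IRRADIANCE_W_M2 * CELL_EFFICIENCY


if __name__ == "__main__":
    for gw in (5, 1):
        cost = array_cost_usd(gw * 1e9, SPACE_USD_PER_W)
        print(f"{gw} GW of space-grade panels: ${cost / 1e9:,.0f} billion")
    print(f"Price ratio space vs Earth: {SPACE_USD_PER_W / EARTH_USD_PER_W:,.0f}x")
    print(f"4 km x 4 km array output: {array_output_w(4.0) / 1e9:.1f} GW")
```

With these inputs, 5 GW comes out at $1,750 billion and 1 GW at $350 billion, and a 4 km x 4 km array delivers roughly the 5 GW class of power discussed above.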

Even in space, you pass through the Earth’s shadow (eclipse) unless you are in a very specific dawn-dusk “Sun-Synchronous” orbit. That means you still need heavy batteries to keep the servers running during the dark side of the orbit, adding massive launch mass. We are not even touching on space debris or anything like that.
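To get a feeling for the battery problem, here is a rough sketch. The 550 km circular orbit, the worst-case geometry (Sun in the orbital plane, simple cylindrical shadow), the 200 Wh/kg battery and the full depth of discharge are all our own assumptions, chosen to be generous to the space data center.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418   # Earth's gravitational parameter
R_EARTH_KM = 6_371.0             # mean Earth radius


def orbital_period_s(altitude_km: float) -> float:
    """Period of a circular orbit at the given altitude."""
    a = R_EARTH_KM + altitude_km
    return 2.0 * math.pi * math.sqrt(a ** 3 / MU_EARTH_KM3_S2)


def max_eclipse_s(altitude_km: float) -> float:
    """Longest time in shadow per orbit (cylindrical shadow model)."""
    half_angle = math.asin(R_EARTH_KM / (R_EARTH_KM + altitude_km))
    return orbital_period_s(altitude_km) * half_angle / math.pi


def battery_mass_tonnes(load_w: float, eclipse_s: float,
                        wh_per_kg: float = 200.0) -> float:
    """Battery mass needed to carry the load through one eclipse.

    Assumes the battery is fully discharged every orbit, which real
    batteries would not survive for long.
    """
    energy_wh = load_w * eclipse_s / 3600.0
    return energy_wh / wh_per_kg / 1000.0


if __name__ == "__main__":
    alt = 550.0  # assumption: a typical low Earth orbit altitude
    eclipse = max_eclipse_s(alt)
    print(f"Orbit period: {orbital_period_s(alt) / 60:.1f} min")
    print(f"Max eclipse:  {eclipse / 60:.1f} min")
    print(f"Battery for 1 GW: {battery_mass_tonnes(1e9, eclipse):,.0f} t")
```

Under these assumptions you spend about 35 of every 95 minutes in the dark, and bridging that for a 1 GW load means launching batteries weighing thousands of tonnes.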

In summary, Space Data Centers try to solve a terrestrial energy constraint by introducing three worse constraints: radiation damage, impossibility of cooling, and 10,000x higher energy infrastructure costs.

2. Humanoids, for What?

All rivers lead to NieR: Automata and 2B - if they released a model like that, people would be lining up outside of the Tesla factory like it is the release of Halo 3, but the reality is much, much more grim. Hate to break the news to you, but for one thing, the Humanoids we are talking about were totally controlled via VR, as we saw at that prominent Tesla event where people actually ate that slop up.

For another, the reason it is brought up is pretty simple: as EV growth slows and margins compress (Operating Income down 40% YoY), the promise of “unlimited labor” is being used to bolster stock valuations. The pitch is that Optimus will be a general-purpose worker, capable of doing anything a human can do. As if the whole AI thing couldn’t get any better, in the future we get humanoid pharmacists running on ChatGPT, handing out the wrong drugs like candy, saying after you died “you are totally right, these were the wrong pills” and then messing up the next dosage again while tossing a pack of Viagra into your grave. Tesla is, after all, a tech company and not a car company, so let us look into this frontier of tech.

2.1 Outlook and Market: The General Purpose Fallacy

The market looks big for Humanoids. After all, companies like Amazon, assembly lines, and anything else with a costly human workforce that could be replaced by robots are... going to be replaced by humanoids?

Yeah, doesn't sound so good because well it isn't. Goldman Sachs and others project a massive Total Addressable Market (TAM) based on the idea that Optimus can replace human labor 1:1. However, a robot that tries to do everything often ends up doing nothing well compared to specialized machinery. Optimus is competing against specialized, perfected industrial tools, not just humans. Why try to replace a Human by a human-like robot? That is exactly the reason why we got Robots in the first place. We are getting back to the 2B maid point we said at the beginning. The critical disadvantage of humanoids compared to traditional robots is the continuous inference cost. They require expensive, real-time AI processing just to execute even the simplest tasks effectively.

After all, wherever you could theoretically replace humans with Humanoids, you’d need factories which are designed specifically for this. But factories are not designed for bipeds; they are designed for the product. The most efficient way to move a box is a conveyor belt, not a biped carrying it. The most efficient way to weld is a 6-axis arm bolted to the floor, not a robot standing on two legs. This is going in the same direction as the Boring Company tunnels in Las Vegas: how about using a train instead?

Not saying that it is completely off in the future to have humanoids but it takes time until that technology matures and finds its real place in the world, maybe we are not ready for clankers yet. You’ll see more of that in the next point.

We are mostly leaving the technical aspects out of this, like the battery and how long it can function without being connected to the grid. That is mumbo jumbo for us right now because the mere idea of integrating it into the infrastructure is not viable at the moment. The question with these things is: how scalable are they? In demos they (including Boston Dynamics) showed that they can do repetitive tasks for maybe 2 hours. Optimus carries a 2.3 kWh battery. A human works 8 hours on a sandwich.

This is for the nerds real quick, so here is the math: Tesla claims Optimus Gen 2 carries a 2.3 kWh battery and can work a full shift. Let’s apply the physics of “Cost of Transport” (CoT). Human walking is incredibly efficient (~160 Watts). Robots are not. Historical data shows bipedal robots like ASIMO or Atlas consume between 350W and 500W just to walk, without carrying loads. If Optimus runs its AI compute (~150W) plus sensors (~50W) plus actuators (~300W+ for walking/working), it is burning roughly 500-800 Watts continuously. With a 2.3 kWh battery, that gives you a runtime of roughly 2.9 to 4.6 hours, not an 8-hour shift. A robot that needs a charging break every 3 hours is not a replacement for a worker; it is a liability. Sandwich it is!
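For anyone who wants to play with the numbers, the runtime math above fits into a few lines. The wattages are the rough estimates from this section, not measured Tesla data, and the helper names are our own.

```python
import math

BATTERY_KWH = 2.3   # battery size claimed for Optimus Gen 2 (from the text)
SHIFT_HOURS = 8.0   # a normal human shift


def runtime_hours(battery_kwh: float, draw_w: float) -> float:
    """Hours of operation at a constant power draw."""
    return battery_kwh * 1000.0 / draw_w


def recharges_per_shift(battery_kwh: float, draw_w: float,
                        shift_hours: float = SHIFT_HOURS) -> int:
    """Number of recharge (or battery swap) stops needed within one shift."""
    return math.ceil(shift_hours / runtime_hours(battery_kwh, draw_w)) - 1


if __name__ == "__main__":
    # Estimated total draw: compute + sensors + actuators (see text).
    for draw_w in (500.0, 800.0):
        hours = runtime_hours(BATTERY_KWH, draw_w)
        stops = recharges_per_shift(BATTERY_KWH, draw_w)
        print(f"{draw_w:.0f} W -> {hours:.2f} h runtime, "
              f"{stops} recharge stop(s) per 8 h shift")
```

At 500 W the battery lasts 4.6 hours, at 800 W just under 2.9 hours, so even the optimistic case needs at least one charging stop per shift.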

Companies are trying hard to maximize energy efficiency. But for repetitive work, we could just use a traditional industrial robot instead if we are this eager to not have humans in warehouses anymore.

2.2 The Promises We Have Been Giving: The Wizard of Oz

Just to give you a sense of the timeline: Elon Musk said in 2021 that the first prototype would be ready the next year, and later on said that 2025 would be the time to deploy at scale. There are exactly the same number of fully functioning Optimus bots as there are Roadsters on the street.

The credibility of the program took a massive hit with the “fainting” incident. During a demo, an Optimus robot fell over. But it wasn’t a normal fall. As it fell, its hands shot up to its head in a gesture that perfectly mimicked a human pulling off a Virtual Reality (VR) headset. This strongly implies the human pilot panicked, ripped off their headset, and the robot mirrored the motion while crashing. It’s the “Wizard of Oz” moment.

3. Safety First - Robotaxis

We will keep it short now: the first two are visionary work, but this one is just straight nonsense compared to the competitors.

The third pillar of the Muskian vision is the Robotaxi network. This vision relies entirely on Tesla’s Full Self‑Driving (FSD) software achieving Level 4 or Level 5 autonomy. However, 2025 has brought damaging admissions and regulatory crackdowns that undermine the whole premise.

While Tesla marketing claims FSD is “safer than a human,” the data tells a very different story once you look at what is actually going on under the hood.

In the recent Austin pilot program, Tesla Robotaxis (which still require human safety monitors) crashed roughly once every 35,000 miles.

In comparison, Waymo’s fully driverless fleet (with no human in the car) crashes only once every 476,000 miles, and a human driver crashes about once every 214,000 miles.

Before you buckle up in a Tesla Robotaxi, start praying and make sure your life insurance is up to date, especially because the odds of being in a crash, even if it’s not fatal, are higher than getting an upset stomach from a questionable sandwich at the deli (for the calculation, see this video, which we highly recommend btw).
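To make the comparison easier to digest, here is a tiny sketch that turns the miles-per-crash figures quoted above into crash rates. The input numbers are taken from this section as-is; we did not verify them independently.

```python
# Miles driven per crash, as quoted in the text.
MILES_PER_CRASH = {
    "Tesla Robotaxi (Austin pilot)": 35_000,
    "Human driver": 214_000,
    "Waymo (driverless)": 476_000,
}


def crashes_per_million_miles(miles_per_crash: float) -> float:
    """Convert 'one crash every N miles' into crashes per million miles."""
    return 1_000_000 / miles_per_crash


def times_more_crashes(a_miles_per_crash: float,
                       b_miles_per_crash: float) -> float:
    """How many times more often A crashes than B over the same distance."""
    return b_miles_per_crash / a_miles_per_crash


if __name__ == "__main__":
    for name, miles in MILES_PER_CRASH.items():
        print(f"{name}: {crashes_per_million_miles(miles):.1f} "
              f"crashes per million miles")
    tesla = MILES_PER_CRASH["Tesla Robotaxi (Austin pilot)"]
    for other in ("Human driver", "Waymo (driverless)"):
        factor = times_more_crashes(tesla, MILES_PER_CRASH[other])
        print(f"Tesla Robotaxi vs {other}: {factor:.1f}x more crashes")
```

On these figures, the Robotaxi crashes about 6 times as often as a human driver and more than 13 times as often as a Waymo.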

3.1 If you buy cheap, you buy two times

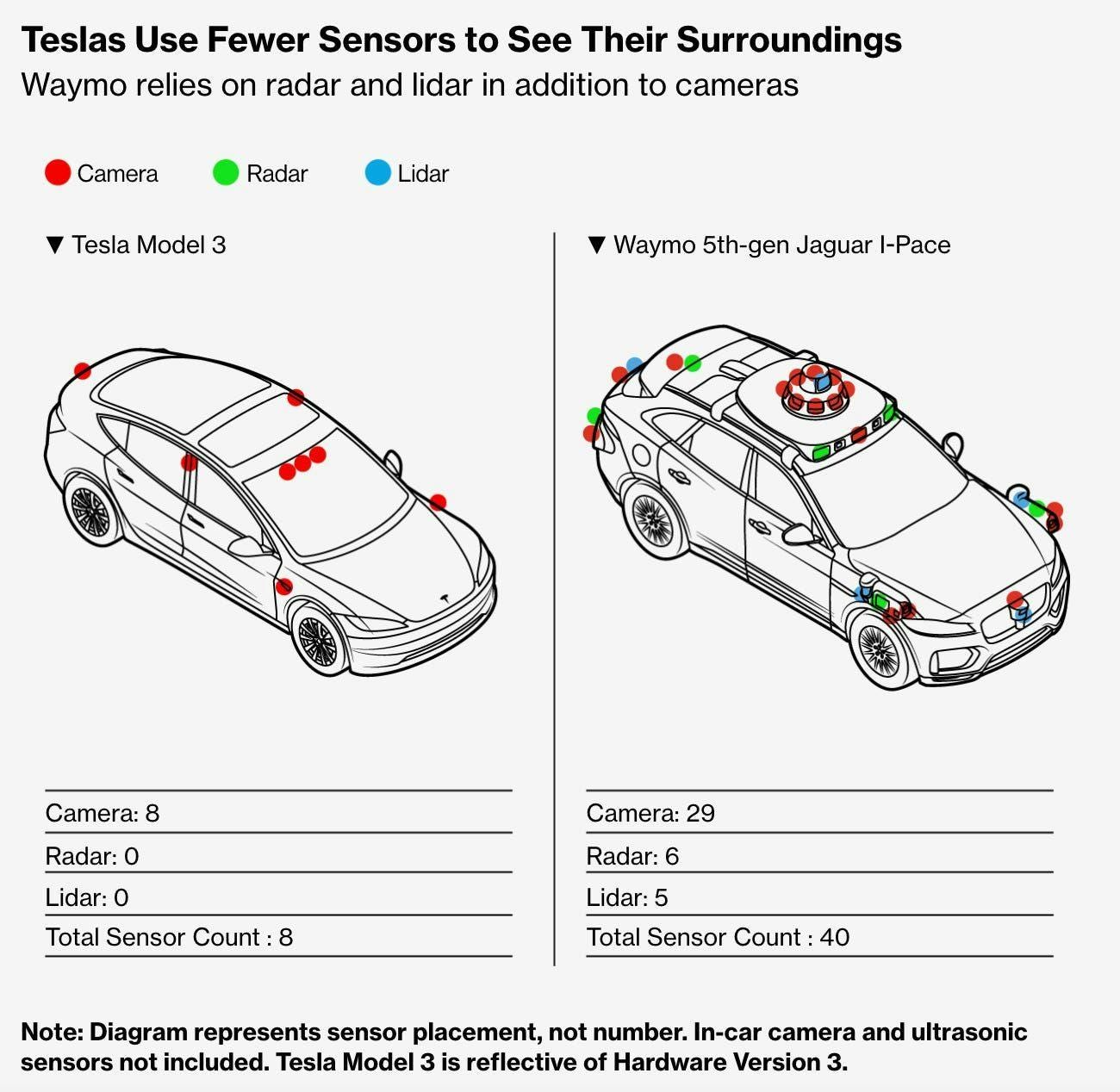

To cut prices down and scale cheaply, Tesla & Musk decided to rely exclusively on a camera system for their cars instead of LiDAR, as Waymo, for example, did. There is of course a hefty price difference:

Robotaxi: Est. <$30,000

Waymo: Est. $100,000 – $150,000+

Ask any Tesla owner in Seattle or London what happens when it rains hard. The screen flashes “FSD Degraded: Cameras Blocked.” Cameras are passive sensors; they need light. Heavy rain, fog, and direct sun glare blind them just like they blind a human. As we said before, Waymo uses LiDAR and Radar. Radar waves shoot right through fog and rain and provide a 3D map of the world regardless of lighting. This is why Waymo is confidently expanding testing to London, a city famous for its complex streets and miserable weather. Robotaxis expanding to the majority of Europe is simply not imaginable - the reason they have partnered up with Abu Dhabi is not only because the UAE welcomes any Western company and hype it can get its hands on, but also because the weather is “optimal” (whatever that means) for camera usage.

If you need any proof, let us explain real quick: this fatal flaw is caused by what is known as Mie scattering. Visible light (400-700nm) scatters when it hits water droplets (fog/rain) or dust. This causes "whiteout" conditions where cameras simply cannot see.
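Physicists compare a particle to the wavelength with the so-called size parameter x = 2πr/λ. When x is far below 1 (the Rayleigh regime), the wave barely notices the droplet; once x reaches 1 or more, scattering gets strong. Here is a minimal sketch of that comparison. The 10 µm fog droplet radius, the 550 nm green light and the 77 GHz automotive radar are our own illustrative assumptions, not the specs of any particular car, and the 0.1 threshold is just a rule of thumb.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def size_parameter(radius_m: float, wavelength_m: float) -> float:
    """Dimensionless size parameter x = 2*pi*r / lambda."""
    return 2.0 * math.pi * radius_m / wavelength_m


def regime(x: float) -> str:
    """Very rough classification; the 0.1 threshold is a rule of thumb."""
    if x < 0.1:
        return "Rayleigh regime (droplet nearly invisible to the wave)"
    return "Mie/geometric regime (strong scattering)"


if __name__ == "__main__":
    fog_radius_m = 10e-6                          # assumption: fog droplet
    camera_wavelength_m = 550e-9                  # assumption: green light
    radar_wavelength_m = SPEED_OF_LIGHT_M_S / 77e9  # assumption: 77 GHz radar

    for name, wl in [("Camera (visible light)", camera_wavelength_m),
                     ("Radar (77 GHz)", radar_wavelength_m)]:
        x = size_parameter(fog_radius_m, wl)
        print(f"{name}: x = {x:.3g} -> {regime(x)}")
```

This only covers fog-sized droplets. Raindrops are much bigger, so heavy rain affects radar too, just far less than it affects cameras.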

See below the difference between the sensors and ask yourself which one you would rather ride in:

(Image from Bloomberg)

We run into a Geographic Wall here.

Until Tesla puts active sensors back on the car (which contradicts Musk’s ego), the “Global Robotaxi” is effectively a “Fair-Weather Robotaxi,” restricted to the Sunbelt. You cannot run a taxi service that shuts down every time it rains. If you have ever been to Hamburg, Germany, where we are from, you know this is a dead investment.

The race for autonomy has revealed two very different corporate strategies and in our opinion has already been won by Waymo.

Tesla insists on doing everything alone (Vertical Integration) but the base is not something to build on.

They build the car, the chip, the software, and the insurance. This sounds great for margins, until your “basis” (HW3) turns out to be faulty. Now, that vertical integration is a liability—they have to recall and retrofit millions of their own cars because they refused to use industry-standard sensors.

Waymo plays the long game.

Instead of building cars, they partnered with Zeekr (Geely) to build a purpose-made robotaxi with sliding doors and easy cleaning. They partnered with Uber to handle the demand network, instantly gaining millions of customers without spending billions on user acquisition. We are btw long UBER since they will directly profit instead of being threatened by AV.

And guess what? Waymo acquired Latent Logic, a UK AI firm specializing in “imitation learning,” years ago. This allowed them to simulate complex human behaviors (like a cyclist weaving in traffic) long before Tesla started bragging about its “neural net planner.”

Let us conclude this here in four words: The Hangover is Coming

We are witnessing a Capital Expenditure Bubble of historic proportions. Tesla is burning cash to build data centers for a Robotaxi that doesn’t work on current hardware and a Humanoid that needs a human pilot to stand up. Waymo is quietly winning the war by treating autonomy as a safety engineering problem, while Tesla treats it as a stock pumping mechanism.

We chase the sun, but let us remember the fate of Icarus.

We are not seconds before a revolution; we are likely seconds before a correction.

Thanks for reading this lengthy report and let us know what you think!

🌰 Hazelnuts Research – The search for quality at a fair price.

Not every price drop is a risk. Some are a gift – if you understand what you’re buying.

⸻

⚠️ Disclaimer

The information provided herein is for informational purposes only and also serves as a personal trading journal for the author. It represents the author’s personal analysis and opinion and does not constitute financial advice, investment recommendations, or an offer or solicitation to buy or sell any securities. The author is not a registered financial advisor, and readers should consult with their own financial advisors before making any investment decisions.

The content presented is based on publicly available information, sources believed to be reliable, and insights gathered through a combination of manual and automated analysis tools. While efforts are made to ensure accuracy, the author does not guarantee the completeness or timeliness of the information provided and assumes no responsibility or liability for any errors or omissions in the content or for any actions taken in reliance on the information presented.

Readers should be aware that investing involves risks, and past performance is not indicative of future results. The author may or may not hold positions in the companies mentioned. Any investment decisions made based on the information in this report are at the sole discretion of the reader, who assumes full responsibility for their own investment activities.

I approached it from a slightly different angle, with similar conclusions.

https://nvariant.substack.com/p/is-space-based-ai-hosting-a-scam

Outstanding breakdown on the thermal bottleneck for orbital datacenters. The Stefan-Boltzmann constraint is something most hype pieces conveniently skip over, but radiative cooling at scale isn't just expensive; its geometry alone makes these systems impractical. I've seen similar overconfidence in edge deployments where power density assumptions fall apart once you account for real-world cooling limits. The camera-only robotaxi approach feels like another case of optimizing for demo conditions rather than operational robustness.